Finds Pornstars That Look Like People You Know

What is a deepfake?

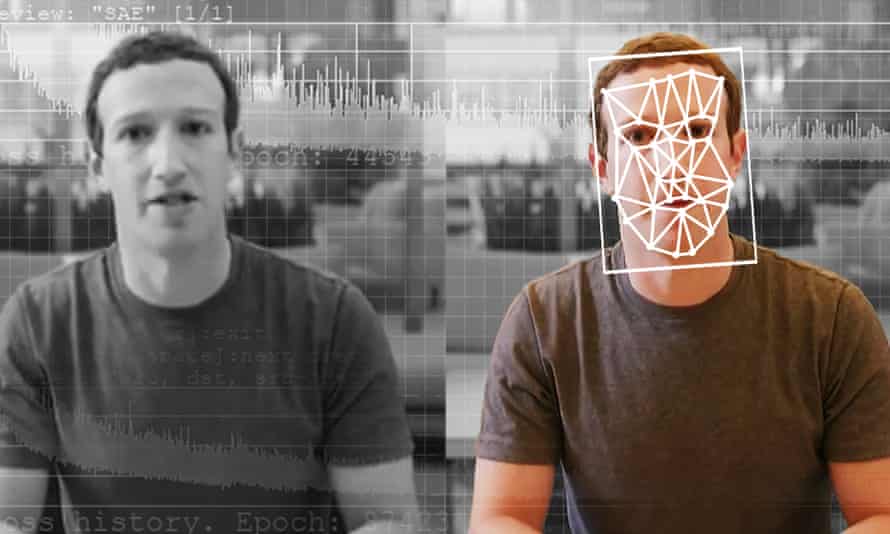

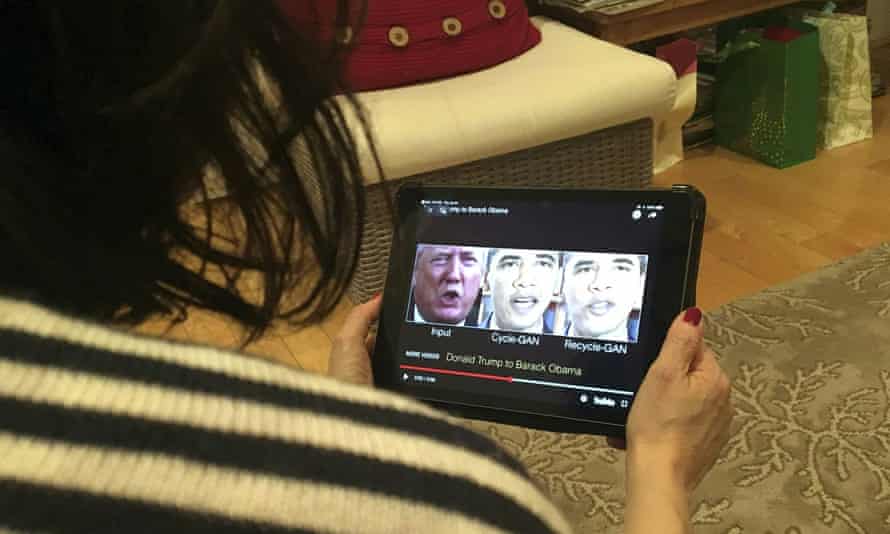

Have you seen Barack Obama call Donald Trump a "complete dipshit", or Mark Zuckerberg brag about having "total control of billions of people's stolen data", or witnessed Jon Snow's moving apology for the dismal ending to Game of Thrones? Answer yes and you've seen a deepfake. The 21st century's answer to Photoshopping, deepfakes use a form of artificial intelligence called deep learning to make images of fake events, hence the name deepfake. Want to put new words in a politician's mouth, star in your favourite movie, or dance like a pro? Then it's time to make a deepfake.

What are they for?

Many are pornographic. The AI firm Deeptrace found 15,000 deepfake videos online in September 2019, a near doubling over nine months. A staggering 96% were pornographic and 99% of those mapped faces from female celebrities on to porn stars. As new techniques allow unskilled people to make deepfakes with a handful of photos, fake videos are likely to spread beyond the celebrity world to fuel revenge porn. As Danielle Citron, a professor of law at Boston University, puts it: "Deepfake technology is being weaponised against women." Beyond the porn there's plenty of spoof, satire and mischief.

Is it just about videos?

No. Deepfake technology can create convincing but entirely fictional photos from scratch. A non-existent Bloomberg journalist, "Maisy Kinsley", who had a profile on LinkedIn and Twitter, was probably a deepfake. Another LinkedIn fake, "Katie Jones", claimed to work at the Center for Strategic and International Studies, but is thought to be a deepfake created for a foreign spying operation.

Audio can be deepfaked too, to create "voice skins" or "voice clones" of public figures. Last March, the chief of a UK subsidiary of a German energy firm paid nearly £200,000 into a Hungarian bank account after being phoned by a fraudster who mimicked the German CEO's voice. The company's insurers believe the voice was a deepfake, but the evidence is unclear. Similar scams have reportedly used recorded WhatsApp voice messages.

How are they made?

University researchers and special effects studios have long pushed the boundaries of what's possible with video and image manipulation. But deepfakes themselves were born in 2017 when a Reddit user of the same name posted doctored porn clips on the site. The videos swapped the faces of celebrities – Gal Gadot, Taylor Swift, Scarlett Johansson and others – on to porn performers.

It takes a few steps to make a face-swap video. First, you run thousands of face shots of the two people through an AI algorithm called an encoder. The encoder finds and learns similarities between the two faces, and reduces them to their shared common features, compressing the images in the process. A second AI algorithm called a decoder is then taught to recover the faces from the compressed images. Because the faces are different, you train one decoder to recover the first person's face, and another decoder to recover the second person's face. To perform the face swap, you simply feed encoded images into the "wrong" decoder. For example, a compressed image of person A's face is fed into the decoder trained on person B. The decoder then reconstructs the face of person B with the expressions and orientation of face A. For a convincing video, this has to be done on every frame.

Another way to make deepfakes uses what's called a generative adversarial network, or Gan. A Gan pits two artificial intelligence algorithms against each other. The first algorithm, known as the generator, is fed random noise and turns it into an image. This synthetic image is then added to a stream of real images – of celebrities, say – that are fed into the second algorithm, known as the discriminator. At first, the synthetic images will look nothing like faces. But repeat the process countless times, with feedback on performance, and the discriminator and generator both improve. Given enough cycles and feedback, the generator will start producing utterly realistic faces of completely nonexistent celebrities.

Who is making deepfakes?

Everyone from academic and industrial researchers to amateur enthusiasts, visual effects studios and porn producers. Governments might be dabbling in the technology, too, as part of their online strategies to discredit and disrupt extremist groups, or make contact with targeted individuals, for instance.

What technology do you need?

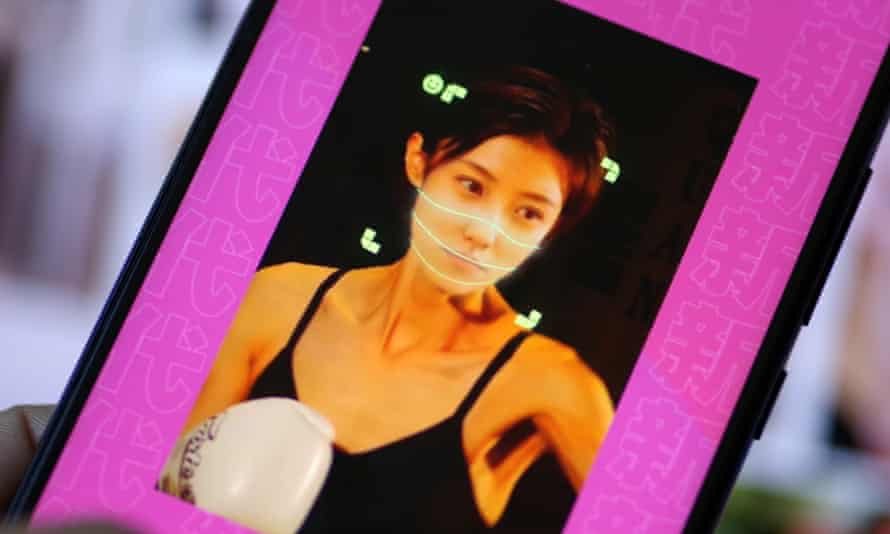

It is hard to make a good deepfake on a standard computer. Most are created on high-end desktops with powerful graphics cards or better still with computing power in the cloud. This reduces the processing time from days and weeks to hours. But it takes expertise, too, not least to touch up completed videos to reduce flicker and other visual defects. That said, plenty of tools are now available to help people make deepfakes. Several companies will make them for you and do all the processing in the cloud. There's even a mobile phone app, Zao, that lets users add their faces to a list of TV and movie characters on which the system has trained.

How do you spot a deepfake?

It gets harder as the technology improves. In 2018, US researchers discovered that deepfake faces don't blink normally. No surprise there: the majority of images show people with their eyes open, so the algorithms never really learn about blinking. At first, it seemed like a silver bullet for the detection problem. But no sooner had the research been published than deepfakes appeared with blinking. Such is the nature of the game: as soon as a weakness is revealed, it is fixed.

Poor-quality deepfakes are easier to spot. The lip synching might be bad, or the skin tone patchy. There can be flickering around the edges of transposed faces. And fine details, such as hair, are particularly hard for deepfakes to render well, especially where strands are visible on the fringe. Badly rendered jewellery and teeth can also be a giveaway, as can strange lighting effects, such as inconsistent illumination and reflections on the iris.

Governments, universities and tech firms are all funding research to detect deepfakes. Last month, the first Deepfake Detection Challenge kicked off, backed by Microsoft, Facebook and Amazon. It will include research teams around the world competing for supremacy in the deepfake detection game.

Facebook last week banned deepfake videos that are likely to mislead viewers into thinking someone "said words that they did not actually say", in the run-up to the 2020 US election. However, the policy covers only misinformation produced using AI, meaning "shallowfakes" (see below) are still allowed on the platform.

Will deepfakes wreak havoc?

We can expect more deepfakes that harass, intimidate, demean, undermine and destabilise. But will deepfakes spark major international incidents? Here the situation is less clear. A deepfake of a world leader pressing the big red button should not cause armageddon. Nor will deepfake satellite images of troops massing on a border cause much trouble: most nations have their own reliable security imaging systems.

There is still ample room for mischief-making, though. Last year, Tesla stock crashed when Elon Musk smoked a joint on a live web show. In December, Donald Trump flew home early from a Nato meeting when genuine footage emerged of other world leaders apparently mocking him. Will plausible deepfakes shift stock prices, influence voters and provoke religious tension? It seems a safe bet.

Will they undermine trust?

The more insidious impact of deepfakes, along with other synthetic media and fake news, is to create a zero-trust society, where people cannot, or no longer bother to, distinguish truth from falsehood. And when trust is eroded, it is easier to raise doubts about specific events.

Last year, Cameroon's minister of communication dismissed as fake news a video that Amnesty International believes shows the country's soldiers executing civilians.

Donald Trump, who admitted to boasting about grabbing women's genitals in a recorded conversation, later suggested the tape was not real. In Prince Andrew's BBC interview with Emily Maitlis, the prince cast doubt on the authenticity of a photo taken with Virginia Giuffre, a shot her attorney insists is genuine and unaltered.

"The problem may not be so much the faked reality as the fact that real reality becomes plausibly deniable," says Prof Lilian Edwards, a leading expert in internet law at Newcastle University.

As the technology becomes more accessible, deepfakes could mean trouble for the courts, particularly in child custody battles and employment tribunals, where faked events could be entered as evidence. But they also pose a personal security risk: deepfakes can mimic biometric data, and can potentially trick systems that rely on face, voice, vein or gait recognition. The potential for scams is clear. Phone someone out of the blue and they are unlikely to transfer money to an unknown bank account. But what if your "mother" or "sister" sets up a video call on WhatsApp and makes the same request?

What's the solution?

Ironically, AI may be the answer. Artificial intelligence already helps to spot fake videos, but many existing detection systems have a serious weakness: they work best for celebrities, because they can train on hours of freely available footage. Tech firms are now working on detection systems that aim to flag up fakes whenever they appear. Another strategy focuses on the provenance of the media. Digital watermarks are not foolproof, but a blockchain online ledger system could hold a tamper-proof record of videos, pictures and audio so their origins and any manipulations can always be checked.
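To make the provenance idea concrete, here is a minimal sketch of a hash-chained ledger in Python. It is an illustration under stated assumptions, not how any real blockchain or media-provenance product works: the `ProvenanceLedger` class, its method names and the sample byte strings are all hypothetical, and the ledger lives in a single process with no distributed consensus. Each entry stores a SHA-256 fingerprint of a media file plus the hash of the previous entry, so altering an earlier record breaks every link after it.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


class ProvenanceLedger:
    """Hypothetical append-only hash chain of media fingerprints."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(body: dict) -> str:
        # Serialise with sorted keys so the hash is deterministic.
        return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))

    def register(self, media: bytes, note: str) -> dict:
        """Append a record that commits to the media and to the prior entry."""
        prev = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        body = {"media_hash": sha256_hex(media), "note": note, "prev_hash": prev}
        entry = dict(body, entry_hash=self._entry_hash(body))
        self.entries.append(entry)
        return entry

    def chain_is_intact(self) -> bool:
        """Recompute every link; any edited record makes this return False."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("media_hash", "note", "prev_hash")}
            if entry["prev_hash"] != prev:
                return False
            if entry["entry_hash"] != self._entry_hash(body):
                return False
            prev = entry["entry_hash"]
        return True

    def is_registered(self, media: bytes) -> bool:
        """Check whether these exact bytes were ever recorded."""
        digest = sha256_hex(media)
        return any(e["media_hash"] == digest for e in self.entries)


# Illustrative usage with made-up byte strings standing in for video files.
ledger = ProvenanceLedger()
original = b"original video bytes"
ledger.register(original, "camera upload")
ledger.register(b"cropped video bytes", "cropped for broadcast")
```

A chain like this is tamper-evident rather than tamper-proof: it shows that a record was changed, but only a widely replicated copy of the ledger stops someone from quietly rewriting the whole thing. It also matches exact bytes only, so a re-encoded copy of a genuine video would need its own entry.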

Are deepfakes always malicious?

Not at all. Many are entertaining and some are helpful. Voice-cloning deepfakes can restore people's voices when they lose them to disease. Deepfake videos can enliven galleries and museums. In Florida, the Dalí museum has a deepfake of the surrealist painter who introduces his art and takes selfies with visitors. For the entertainment industry, technology can be used to improve the dubbing on foreign-language films, and more controversially, resurrect dead actors. For example, the late James Dean is due to star in Finding Jack, a Vietnam war movie.

What about shallowfakes?

Coined by Sam Gregory at the human rights organisation Witness, shallowfakes are videos that are either presented out of context or are doctored with simple editing tools. They are crude but undoubtedly impactful. A shallowfake video that slowed down Nancy Pelosi's speech and made the US Speaker of the House sound slurred reached millions of people on social media.

In another incident, Jim Acosta, a CNN correspondent, was temporarily banned from White House press briefings during a heated exchange with the president. A shallowfake video released afterwards appeared to show him making contact with an intern who tried to take the microphone off him. It later emerged that the video had been sped up at the crucial moment, making the move look aggressive. Acosta's press pass was later reinstated.

The UK's Conservative party used similar shallowfake tactics. In the run-up to the recent election, the Conservatives doctored a TV interview with the Labour MP Keir Starmer to make it seem that he was unable to answer a question about the party's Brexit stance. With deepfakes, the mischief-making is only likely to increase. As Henry Ajder, head of threat intelligence at Deeptrace, puts it: "The world is becoming increasingly more synthetic. This technology is not going away."

Source: https://www.theguardian.com/technology/2020/jan/13/what-are-deepfakes-and-how-can-you-spot-them
